stable-audio-control

Editing Music with Melody and Text: Using ControlNet for Diffusion Transformer

Siyuan Hou1,2, Shansong Liu2, Ruibin Yuan3, Wei Xue3, Ying Shan2, Mangsuo Zhao1, Chao Zhang1

1Tsinghua University

2ARC Lab, Tencent PCG

3Hong Kong University of Science and Technology

Supporting webpage for ICASSP 2025.

[Paper on ArXiv]

Abstract

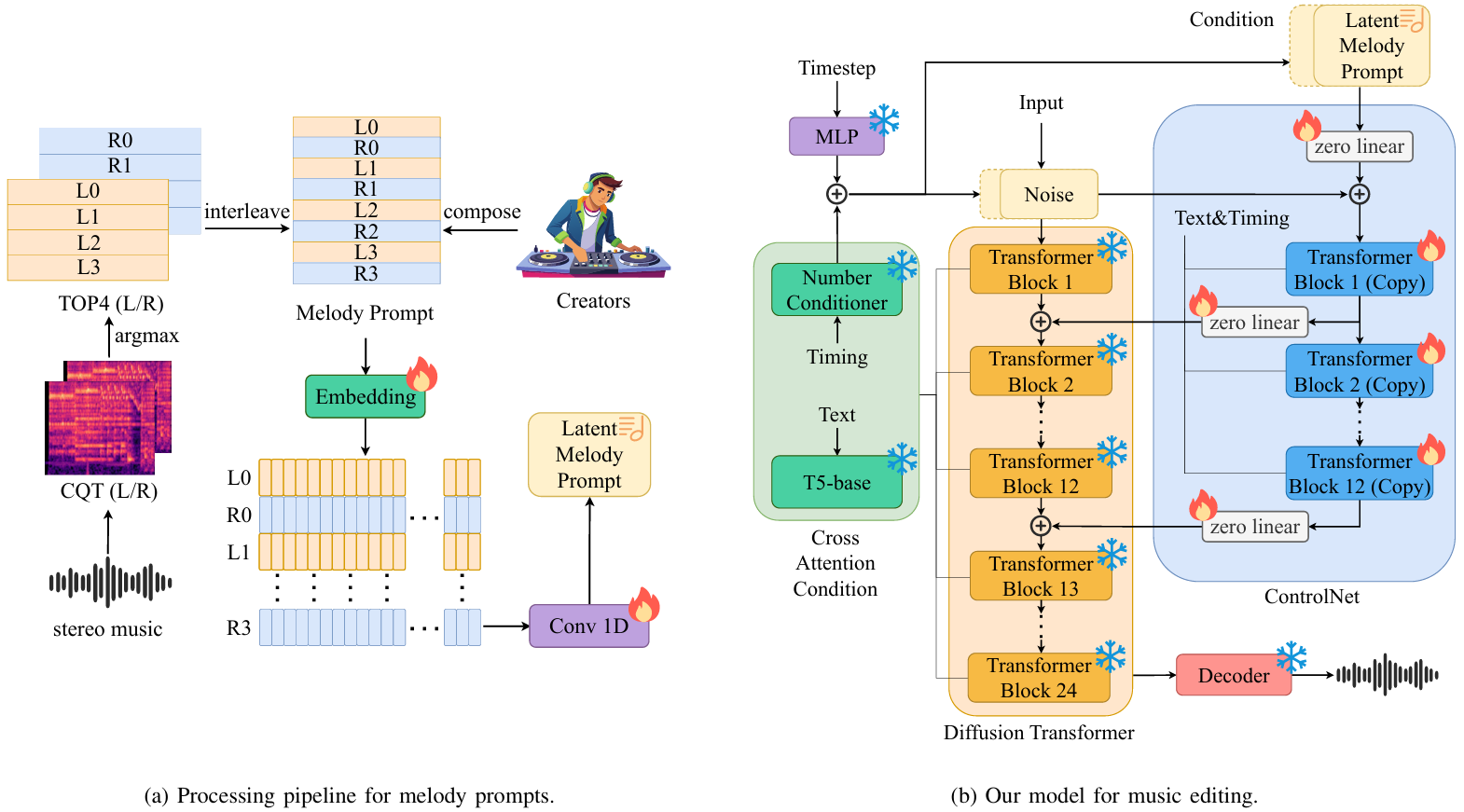

Despite the significant progress in controllable music generation and editing, challenges remain in the quality and length of generated music due to the use of Mel-spectrogram representations and UNet-based model structures. To address these limitations, we propose a novel approach using a Diffusion Transformer (DiT) augmented with an additional control branch using ControlNet. This allows for long-form and variable-length music generation and editing controlled by text and melody prompts. For more precise and fine-grained melody control, we introduce a novel top-k constant-Q Transform representation as the melody prompt, reducing ambiguity compared to previous representations (e.g., chroma), particularly for music with multiple tracks or a wide range of pitch values. To effectively balance the control signals from text and melody prompts, we adopt a curriculum learning strategy that progressively masks the melody prompt, resulting in a more stable training process. Experiments have been performed on text-to-music generation and music-style transfer tasks using open-source instrumental recording data. The results demonstrate that by extending StableAudio, a pre-trained text-controlled DiT model, our approach enables superior melody-controlled editing while retaining good text-to-music generation performance. These results outperform a strong MusicGen baseline in terms of both text-based generation and melody preservation for editing.
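The abstract states only that the melody prompt is masked progressively during training. As a rough illustration, the sketch below interpolates a masking ratio between two values over the course of training and hides that fraction of prompt frames. The linear schedule, the frame-level granularity, the start and end ratios, the `MASK` placeholder, and the names `mask_ratio` and `mask_melody_prompt` are all assumptions made for illustration, not details taken from the paper.

```python
import random
from typing import List, Optional, Sequence

MASK = None  # hypothetical placeholder standing in for a masked frame


def mask_ratio(step: int, total_steps: int, start: float, end: float) -> float:
    """Linearly interpolate the masking ratio from `start` to `end` (assumed schedule)."""
    if total_steps <= 0:
        return end
    progress = min(max(step / total_steps, 0.0), 1.0)
    return start + (end - start) * progress


def mask_melody_prompt(
    frames: Sequence[Sequence[int]], ratio: float, rng: random.Random
) -> List[Optional[List[int]]]:
    """Replace a random `ratio` fraction of frames with MASK, leaving the rest intact."""
    ratio = min(max(ratio, 0.0), 1.0)
    n_masked = round(ratio * len(frames))
    masked_ids = set(rng.sample(range(len(frames)), n_masked))
    return [MASK if i in masked_ids else list(f) for i, f in enumerate(frames)]
```

Whether the ratio should rise or fall over training is left to the caller through `start` and `end`, since the page does not specify the direction.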

[Update: Music Editing Examples]

These examples are all from the Song Describer dataset[1]. For our model, we use a text prompt and a music prompt as conditions for music editing. The text prompt is randomly sampled from the original dataset and then enriched and refined, while the music prompt is the top-4 constant-Q transform (CQT) representation extracted from the input audio. This enables the model to transform the style and instrumentation of the music. All audio samples are 47 seconds long, which is the maximum length supported by the model.
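The idea of a top-k CQT prompt can be sketched as follows: for every time frame, keep only the indices of the k strongest CQT bins. The sketch assumes the CQT magnitudes have already been computed (for example with an audio library such as librosa) and are stored as `cqt_mag[bin][frame]`. The function name `top_k_bins`, the data layout, and the ordering of the returned indices are assumptions for illustration and may differ from the authors' implementation.

```python
from typing import List, Sequence


def top_k_bins(cqt_mag: Sequence[Sequence[float]], k: int = 4) -> List[List[int]]:
    """Return, for each frame, the indices of the k largest-magnitude CQT bins.

    cqt_mag[b][t] is the magnitude of bin b at frame t. Indices within a
    frame are ordered from strongest to weakest bin.
    """
    n_bins = len(cqt_mag)
    n_frames = len(cqt_mag[0]) if n_bins else 0
    result = []
    for t in range(n_frames):
        # Rank all bins of this frame by magnitude, strongest first.
        ranked = sorted(range(n_bins), key=lambda b: cqt_mag[b][t], reverse=True)
        result.append(ranked[:k])
    return result


# Toy input: 5 bins, 2 frames (values are made up).
toy_cqt = [
    [0.1, 0.9],
    [0.5, 0.2],
    [0.3, 0.8],
    [0.7, 0.0],
    [0.2, 0.4],
]
toy_prompt = top_k_bins(toy_cqt, k=2)
```

For stereo audio, the same function could be applied to each channel separately.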

| Input Audio | Text Prompt | Generated Audio |
|---|---|---|
|  | A heartfelt, warm acoustic guitar performance, evoking a sense of tenderness and deep emotion, with a melody that truly resonates and touches the heart. |  |
|  | A vibrant MIDI electronic composition with a hopeful and optimistic vibe. |  |
|  | This track composed of electronic instruments gives a sense of opening and clearness. |  |
|  | This track composed of electronic instruments gives a sense of opening and clearness. |  |
|  | Hopeful instrumental with guitar being the lead and tabla used for percussion in the middle giving a feeling of going somewhere with positive outlook |  |
|  | A string ensemble opens the track with legato, melancholic melodies. The violins and violas play beautifully, while the cellos and bass provide harmonic support for the moving passages. The overall feel is deeply melancholic, with an emotionally stirring performance that remains harmonious and a sense of clearness. |  |
|  | An exceptionally harmonious string performance with a lively tempo in the first half, transitioning to a gentle and beautiful melody in the second half. It creates a warm and comforting atmosphere, featuring cellos and bass providing a solid foundation, while violins and violas showcase the main theme, all without any noise, resulting in a cohesive and serene sound. |  |
|  | Pop solo piano instrumental song. Simple harmony and emotional theme. Makes you feel nostalgic and wanting a cup of warm tea sitting on the couch while holding the person you love. |  |
|  | A whimsical string arrangement with rich layers, featuring violins as the main melody, accompanied by violas and cellos. The light, playful melody blends harmoniously, creating a sense of clarity. |  |
|  | An instrumental piece primarily featuring acoustic guitar, with a lively and nimble feel. The melody is bright, delivering an overall sense of joy. |  |
|  | A joyful saxophone performance that is smooth and cohesive, accompanied by cello. The first half features a relaxed tempo, while the second half picks up with an upbeat rhythm, creating a lively and energetic atmosphere. The overall sound is harmonious and clear, evoking feelings of happiness and vitality. |  |
|  | A cheerful piano performance with a smooth and flowing rhythm, evoking feelings of joy and vitality. |  |
|  | An instrumental piece primarily featuring piano, with a lively rhythm and cheerful melodies that evoke a sense of joyful childhood playfulness. The melodies are clear and bright. |  |
|  | fast and fun beat-based indie pop to set a protagonist-gets-good-at-x movie montage to. |  |
|  | A lively 70s style British pop song featuring drums, electric guitars, and synth violin. The instruments blend harmoniously, creating a dynamic, clean sound without any noise or clutter. |  |
|  | A soothing acoustic guitar song that evokes nostalgia, featuring intricate fingerpicking. The melody is both sacred and mysterious, with a rich texture. |  |

Music Editing

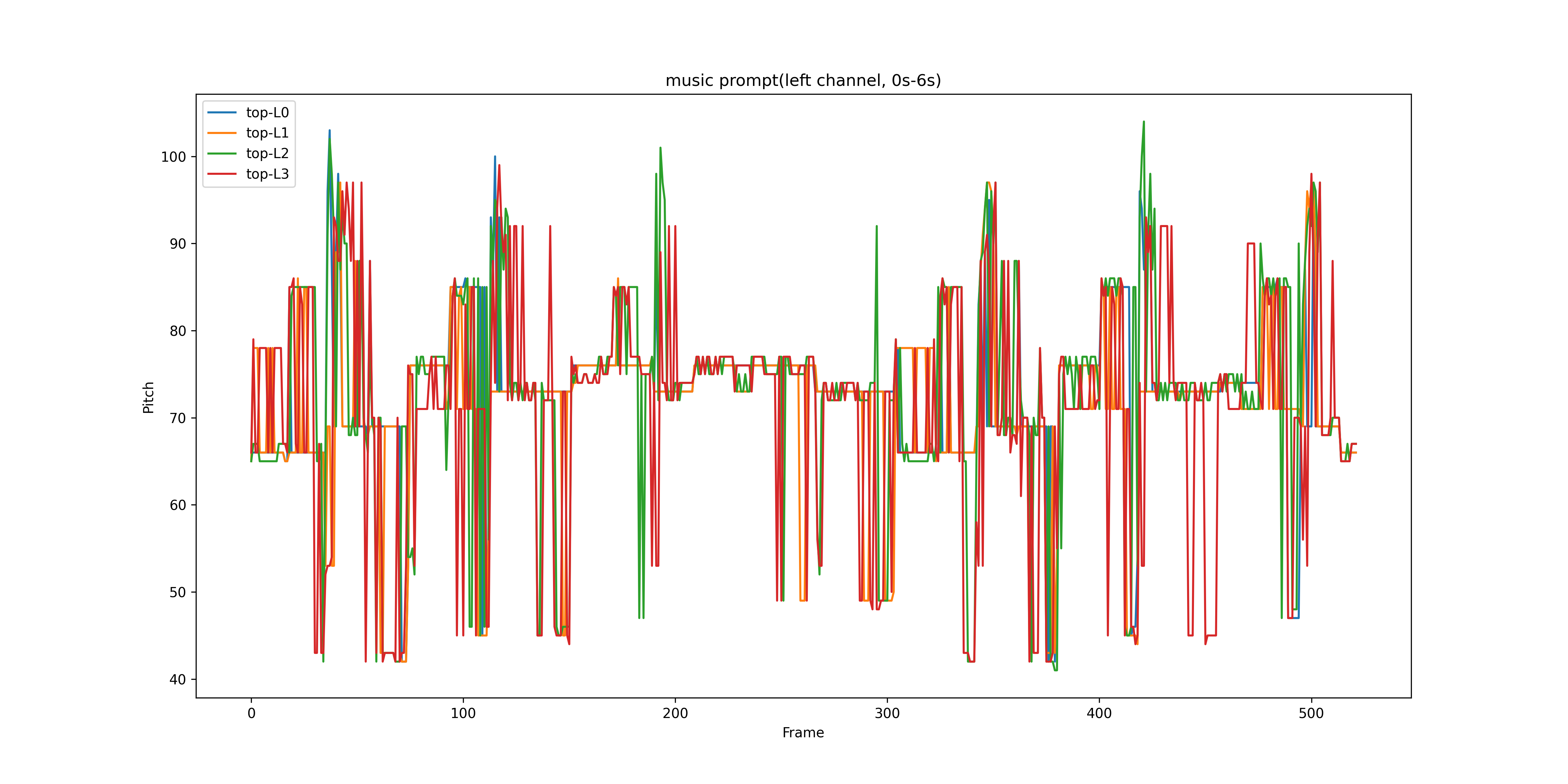

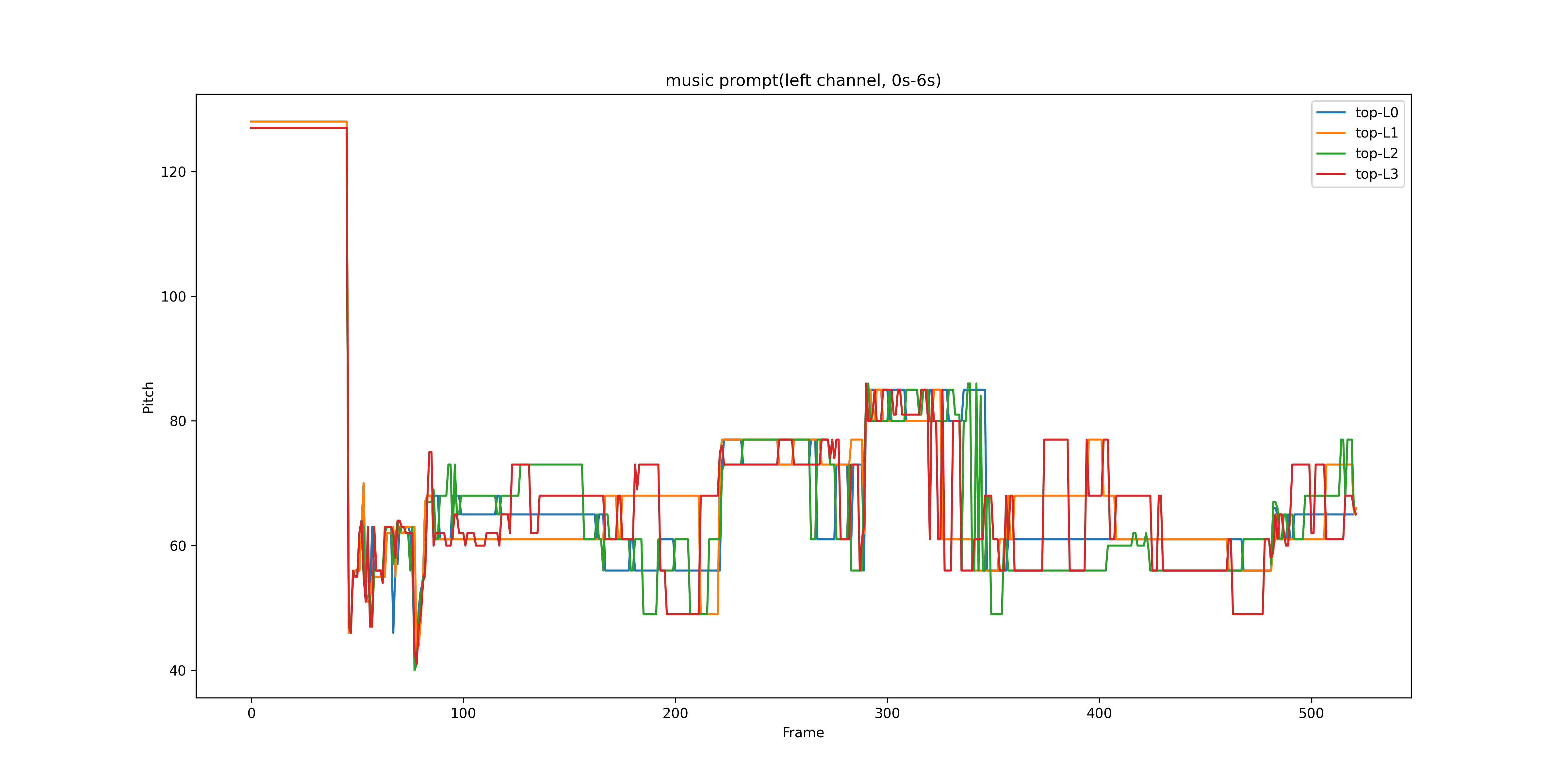

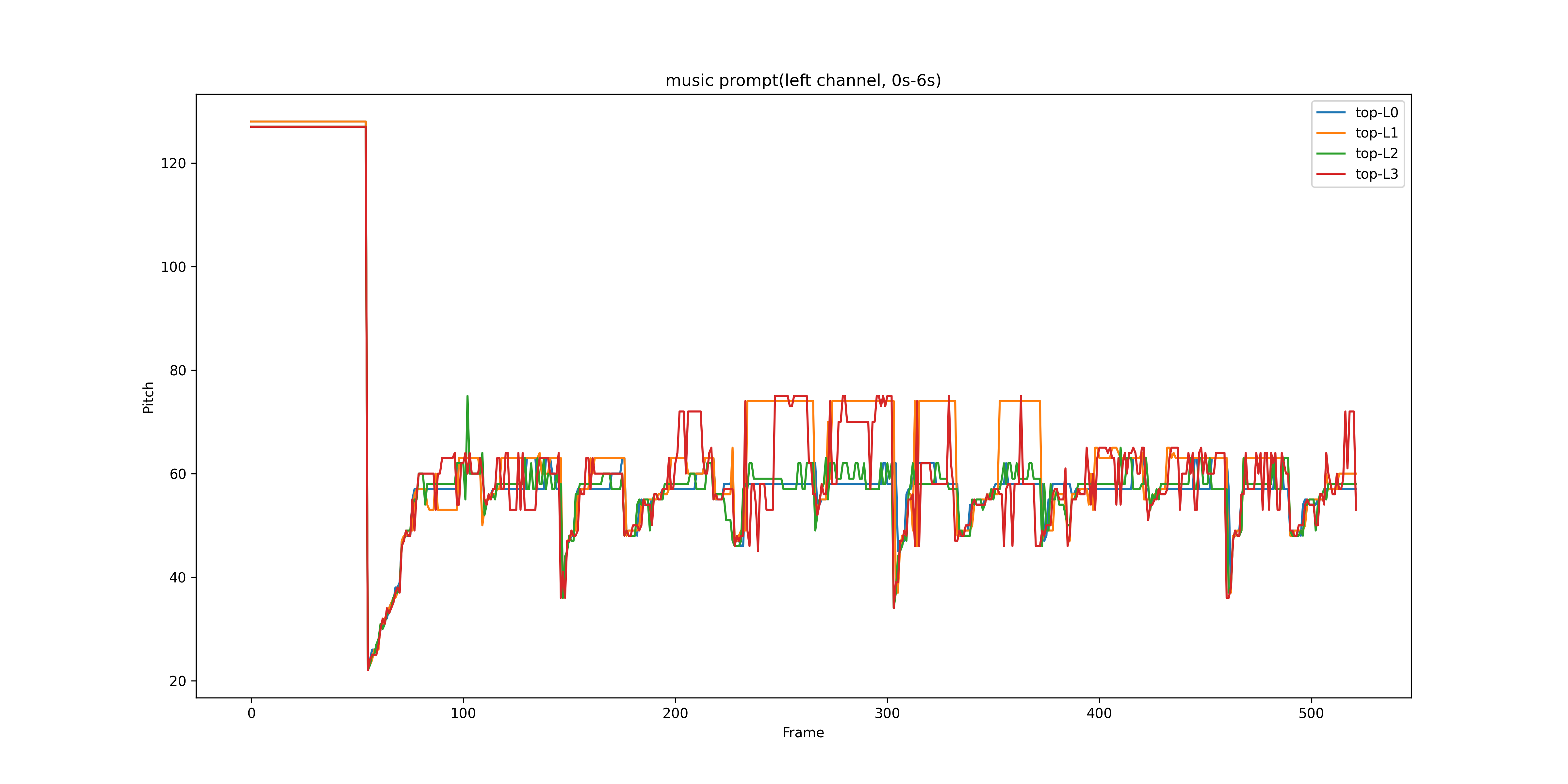

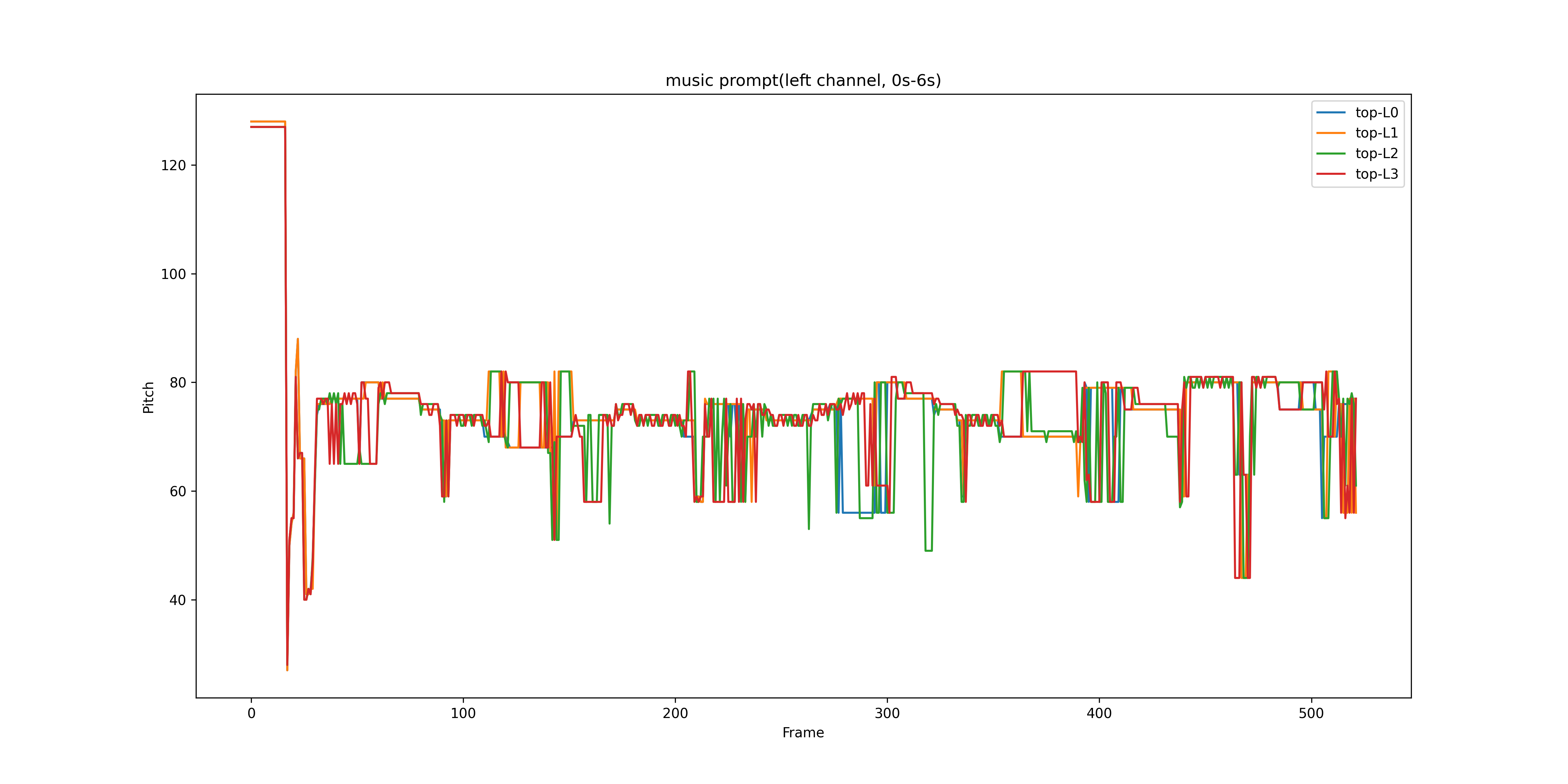

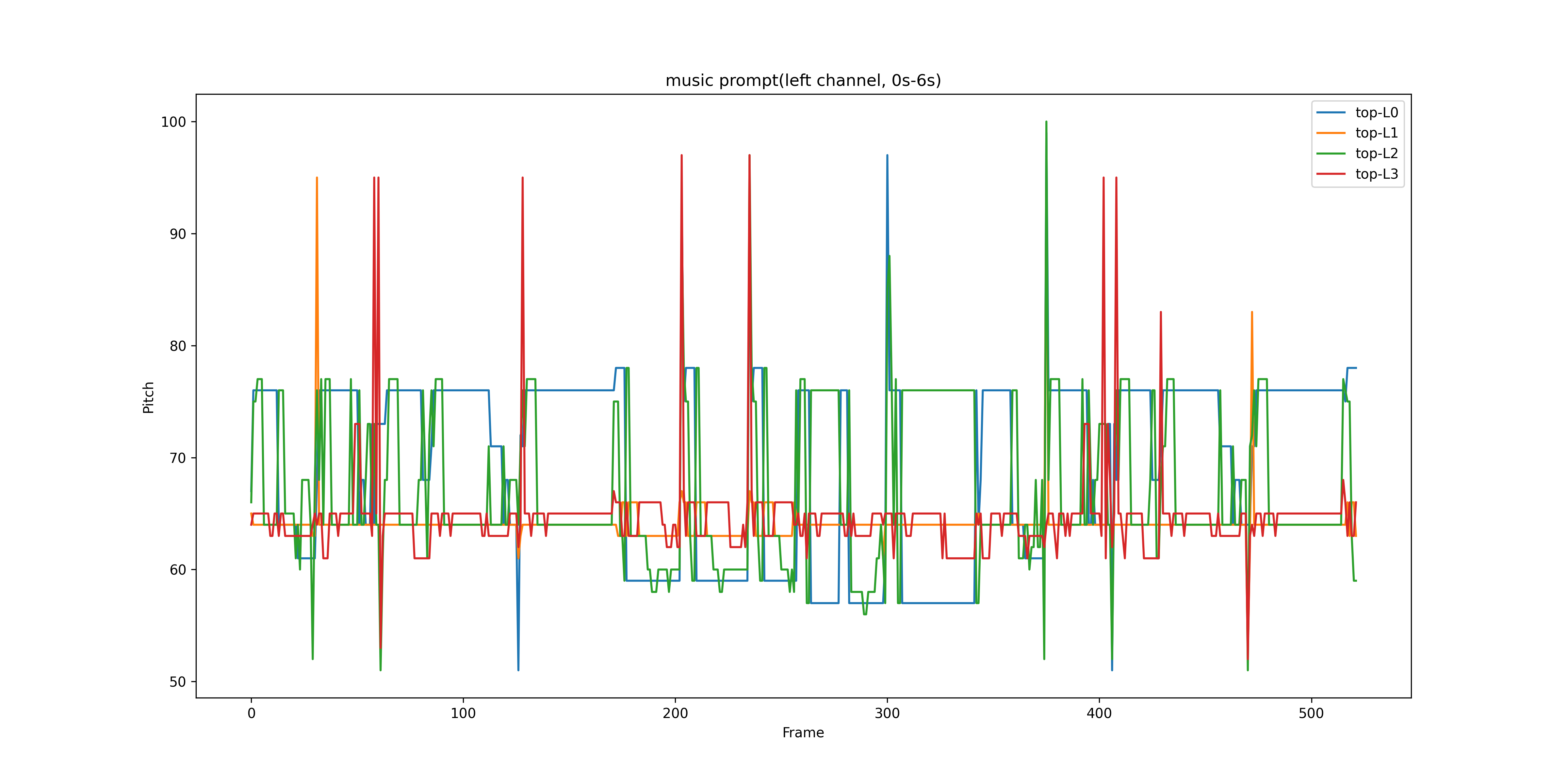

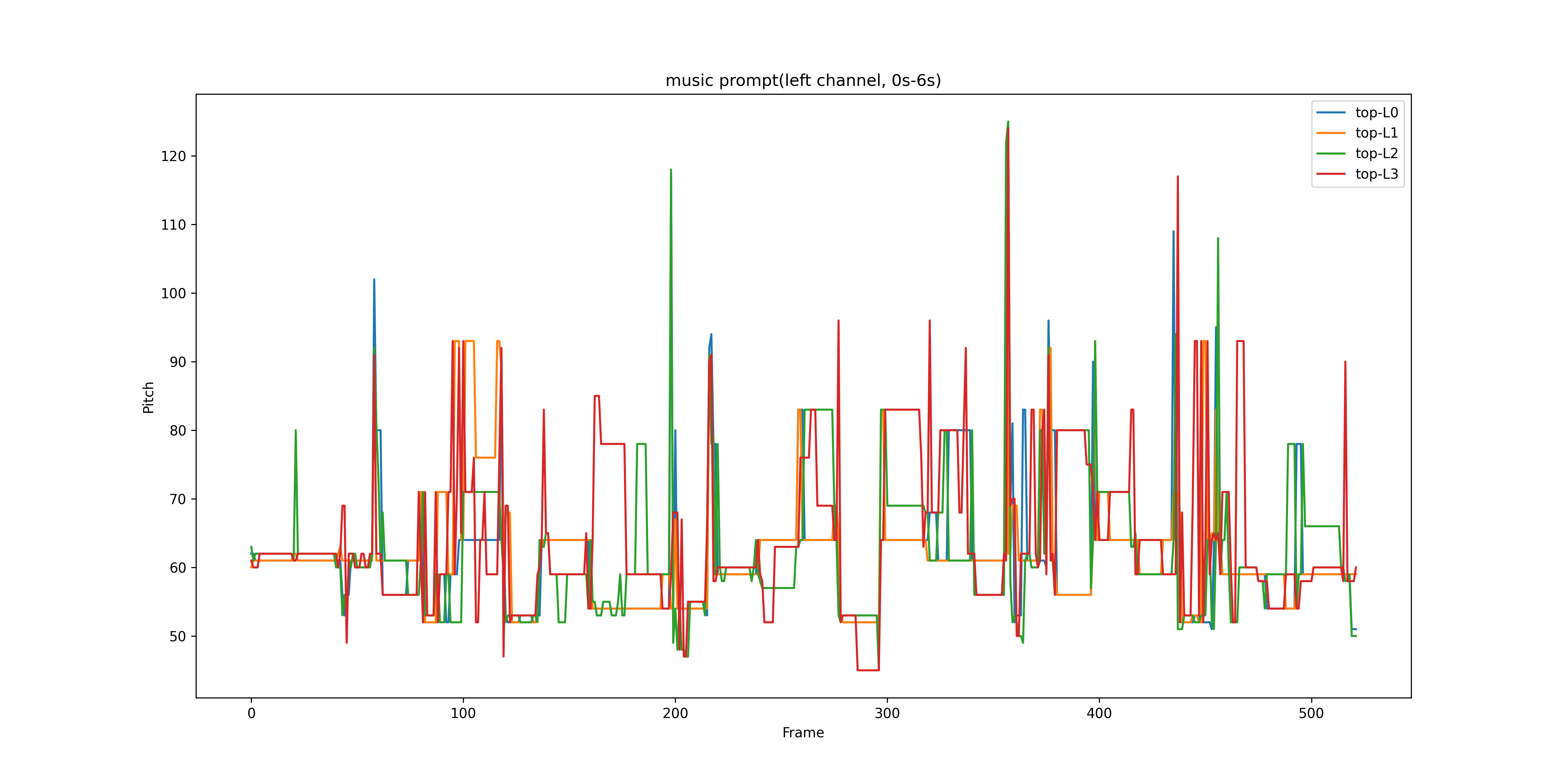

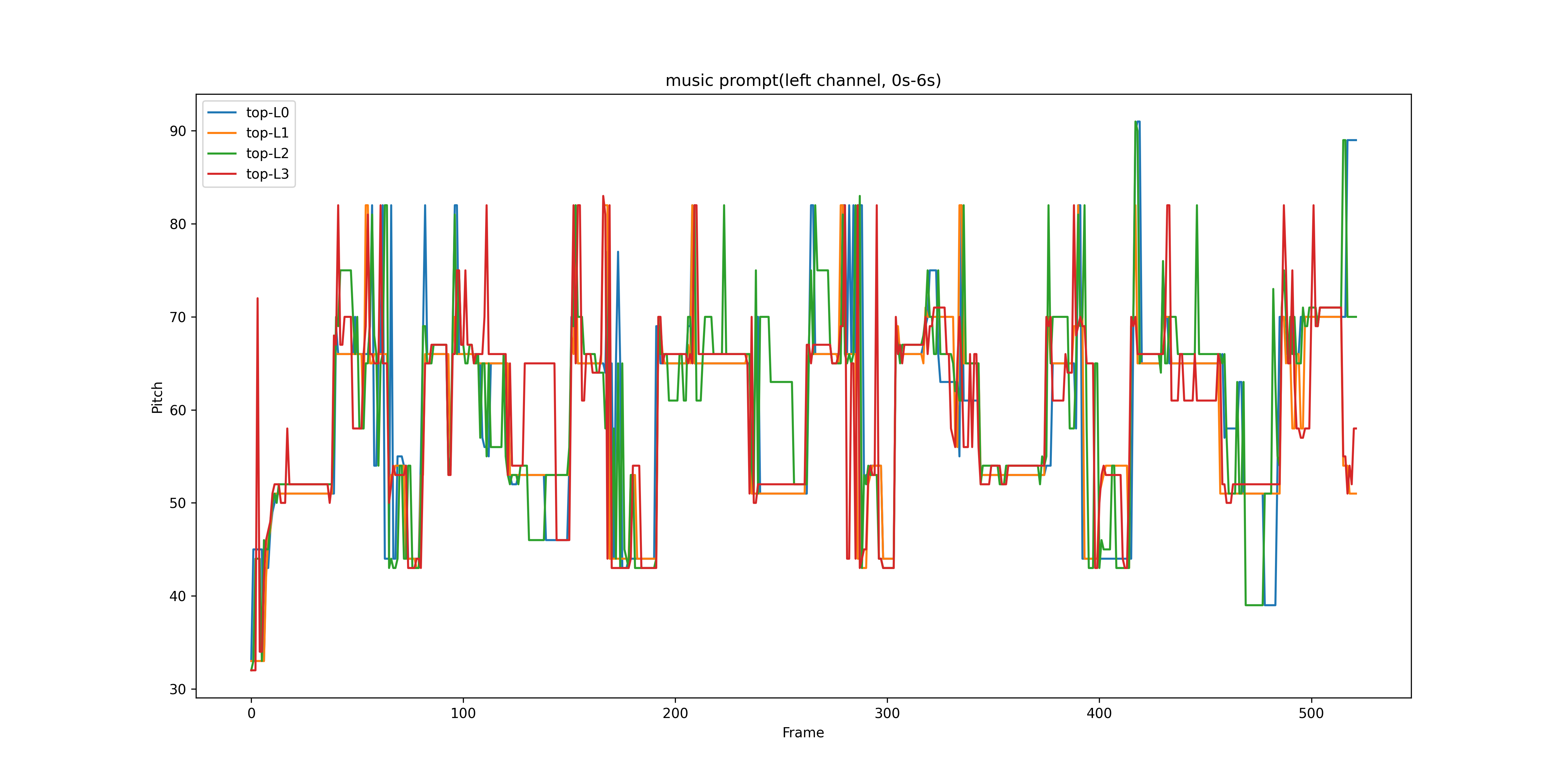

The examples for the music editing task are all from the Song Describer dataset[1]. For our model, we use a text prompt and a music prompt as the conditions for music editing. The text prompt comes from the dataset, while the music prompt is the top-4 constant-Q transform (CQT) representation extracted from the target audio. The music prompt column in the table below displays the top-4 CQT representation of the left channel from 0 to 6 seconds. For the baseline model MusicGen[2], the same text prompt and a chroma-based melody representation are used as conditional inputs.
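A small example may help show why a chroma prompt can be more ambiguous than CQT bin indices for music with a wide pitch range. Chroma folds every pitch onto one of 12 pitch classes, so notes that are octaves apart become indistinguishable, while bin indices keep the register. The sketch assumes one CQT bin per semitone (12 bins per octave) so that folding is a modulo-12 operation; the constant `BINS_PER_OCTAVE`, the function `to_pitch_classes`, and the toy voicings are hypothetical.

```python
from typing import List

BINS_PER_OCTAVE = 12  # assumption: one CQT bin per semitone


def to_pitch_classes(bins: List[int]) -> List[int]:
    """Fold CQT bin indices onto pitch classes (relative to the lowest bin),
    discarding octave information as a chroma representation does."""
    return sorted({b % BINS_PER_OCTAVE for b in bins})


# Two different voicings of the same three pitch classes (made-up values).
close_voicing = [0, 4, 7]    # all notes within one octave
wide_voicing = [0, 16, 31]   # same pitch classes spread over three octaves

same_chroma = to_pitch_classes(close_voicing) == to_pitch_classes(wide_voicing)
same_bins = close_voicing == wide_voicing
```

Here `same_chroma` is true while `same_bins` is false: the two voicings collapse to one chroma pattern but remain distinct as bin indices.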

| Text prompt | Music prompt | Target | MusicGen-melody | MusicGen-melody-large | Ours |
|---|---|---|---|---|---|
| A twisty nice melody song by a slide electric guitar on top of acoustic chords later accompanied with a ukelele. |  |  |  |  |  |
| 8-bit melody brings one back to the arcade saloons while keeping the desire to dance. |  |  |  |  |  |
| Instrumental piano piece with a slightly classical touch and a nostalgic, bittersweet or blue mood. |  |  |  |  |  |
| Positive instrumental pop song with a strong rhythm and brass section. |  |  |  |  |  |
| A blues piano track that would be very well suited in a 90s sitcom. The piano occupies the whole track that has a prominent bass line as well, with a general jolly and happy feeling throughout the song. |  |  |  |  |  |
| An upbeat pop instrumental track starting with synthesized piano sound, later with guitar added in, and then a saxophone-like melody line. |  |  |  |  |  |
| Pop song with a classical chord progression in which all instruments join progressively, building up a richer and richer music. |  |  |  |  |  |
| An instrumental world fusion track with prominent reggae elements. |  |  |  |  |  |

Text To Music

The examples for the text-to-music task also come from the Song Describer dataset[1]. For both our model and the baseline model MusicGen[2], only the text prompt from the dataset is used as the control condition for music generation. In this case, the music prompt for our model is left empty.

| Text prompt | MusicGen-melody | MusicGen-melody-large | Ours |
|---|---|---|---|
| An energetic rock and roll song, accompanied by a nervous electric guitar. |  |  |  |
| A deep house track with a very clear build up, very well balanced and smooth kick-snare timbre. The glockenspiel samples seem to be the best option to aid for the smoothness of such a track, which helps 2 minutes to pass like it was nothing. A very clear and effective contrastive counterpoint structure between the bass and treble registers of keyboards and then the bass drum/snare structure is what makes this song a very good representative of house music. |  |  |  |
| A string ensemble starts of the track with legato melancholic playing. After two bars, a more instruments from the ensemble come in. Alti and violins seem to be playing melody while celli, alti and basses underpin the moving melody lines with harmonies and chords. The track feels ominous and melanchonic. Halfway through, alti switch to pizzicato, and then fade out to let the celli and basses come through with somber melodies, leaving the chords to the violins. |  |  |  |
| medium tempo ambient sounds to begin with and slow guitar plucking layering followed by an ambient rhythmic beat and then remove the layering in the opposite direction. |  |  |  |
| An instrumental surf rock track with a twist. Open charleston beat with strummed guitar and a mellow synth lead. The song is a happy cyberpunk soundtrack. |  |  |  |
| Starts like an experimental hip hop beat, transitions into an epic happy and relaxing vibe with the melody and guitar. It is an instrumental track with mostly acoustic instruments. |  |  |  |

References

[1] I. Manco, B. Weck, S. Doh, M. Won, Y. Zhang, D. Bogdanov, Y. Wu, K. Chen, P. Tovstogan, E. Benetos, E. Quinton, G. Fazekas, and J. Nam, “The Song Describer dataset: A corpus of audio captions for music-and-language evaluation,” in Proc. NeurIPS, New Orleans, 2023.

[2] J. Copet, F. Kreuk, I. Gat, T. Remez, D. Kant, G. Synnaeve, Y. Adi, and A. Défossez, “Simple and controllable music generation,” in Proc. NeurIPS, New Orleans, 2023.